Abstract

Drawing inspiration from psychology, computer vision and machine learning, the team in the Computer Laboratory at the University of Cambridge has developed mind-reading machines: computers that implement a computational model of mind-reading to infer people's mental states from their facial signals. The goal is to enhance human-computer interaction through empathic responses, to improve the user's productivity, and to enable applications to initiate interactions with and on behalf of the user without waiting for explicit input from that user.

Description of Mind-Reading Computer

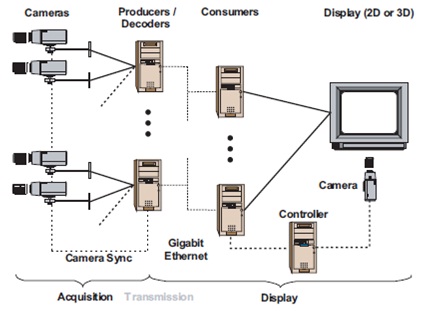

Using a digital video camera, the mind-reading computer system analyzes a person's facial expressions in real time and infers that person's underlying mental state, such as whether he or she is agreeing or disagreeing, interested or bored, thinking or confused.

Prior knowledge of how particular mental states are expressed in the face is combined with real-time analysis of facial expressions and head gestures. The model represents these at different granularities: it starts with individual face and head movements and combines them over time and space into an increasingly clear picture of the mental state being expressed. Software from Nevenvision identifies 24 feature points on the face and tracks them in real time. Movement, shape and colour are then analyzed to identify gestures such as a smile or raised eyebrows. Combinations of these gestures occurring over time indicate mental states; for example, a head nod combined with a smile and raised eyebrows might signal interest. The relationship between observable head and facial displays and the corresponding hidden mental states over time is modeled using Dynamic Bayesian Networks.
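To make the last step more concrete, the following Python sketch shows forward filtering in a very simple dynamic Bayesian network: one hidden mental-state variable per time step, linked to the previous step by a transition table, with binary gesture detections as the observations. The state names, gesture names, the helper functions `likelihood` and `filter_states`, and every probability are hypothetical values chosen for illustration; they are not taken from the Cambridge system, whose networks are considerably richer.

```python
"""Forward filtering in a minimal dynamic Bayesian network (illustrative sketch).

Hypothetical assumptions: three hidden mental states, three binary gesture
detectors, and hand-picked probabilities. None of these values come from
the Cambridge system.
"""
from typing import Dict, List

STATES: List[str] = ["interested", "bored", "thinking"]  # hypothetical states
GESTURES: List[str] = ["head_nod", "smile", "eyebrow_raise"]  # hypothetical detectors

# P(S_t = s | S_{t-1} = prev): mental states tend to persist between time steps.
TRANSITION: Dict[str, Dict[str, float]] = {
    "interested": {"interested": 0.8, "bored": 0.1, "thinking": 0.1},
    "bored": {"interested": 0.1, "bored": 0.8, "thinking": 0.1},
    "thinking": {"interested": 0.1, "bored": 0.1, "thinking": 0.8},
}

# P(gesture detected | S_t = s); gestures are assumed conditionally
# independent given the hidden state (a simplifying assumption).
EMISSION: Dict[str, Dict[str, float]] = {
    "interested": {"head_nod": 0.7, "smile": 0.6, "eyebrow_raise": 0.6},
    "bored": {"head_nod": 0.1, "smile": 0.1, "eyebrow_raise": 0.1},
    "thinking": {"head_nod": 0.2, "smile": 0.1, "eyebrow_raise": 0.3},
}


def likelihood(state: str, observed: Dict[str, bool]) -> float:
    """P(observed gestures | state) under the independence assumption."""
    p = 1.0
    for gesture in GESTURES:
        p_detect = EMISSION[state][gesture]
        p *= p_detect if observed[gesture] else 1.0 - p_detect
    return p


def filter_states(
    observations: List[Dict[str, bool]], prior: Dict[str, float]
) -> List[Dict[str, float]]:
    """Return the posterior P(S_t | observations up to t) for each time step."""
    belief = dict(prior)
    history: List[Dict[str, float]] = []
    for observed in observations:
        # Predict: propagate the previous belief through the transition model.
        predicted = {
            s: sum(TRANSITION[prev][s] * belief[prev] for prev in STATES)
            for s in STATES
        }
        # Update: weight each state by how well it explains this step's gestures.
        unnormalized = {s: predicted[s] * likelihood(s, observed) for s in STATES}
        total = sum(unnormalized.values())
        belief = {s: value / total for s, value in unnormalized.items()}
        history.append(belief)
    return history


if __name__ == "__main__":
    uniform = {s: 1.0 / len(STATES) for s in STATES}
    nod_smile_raise = {"head_nod": True, "smile": True, "eyebrow_raise": True}
    for t, posterior in enumerate(filter_states([nod_smile_raise] * 3, uniform)):
        best = max(posterior, key=posterior.get)
        print(t, best, round(posterior[best], 3))
```

Forward filtering uses only the evidence seen up to the current frame, so a sketch like this can update its belief incrementally as each new frame of gestures arrives.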

Why mind reading?

The mind-reading computer system presents information about your mental state as easily as a keyboard and mouse present text and commands. Imagine a future where we are surrounded by mobile phones, cars and online services that can read our minds and react to our moods. How would that change our use of technology and our lives? We are working with a major car manufacturer to implement this system in cars to detect driver mental states such as drowsiness, distraction and anger. Current projects in Cambridge are considering further inputs, such as body posture and gestures, to improve the inference. We can then use the same models to control the animation of cartoon avatars. We are also looking at the use of mind-reading to support online shopping and learning systems.

The mind-reading computer system may also be used to monitor and suggest improvements in human-human interaction. The Affective Computing Group at the MIT Media Laboratory is developing an emotional-social intelligence prosthesis that explores new technologies to augment and improve people's social interactions and communication skills.

Web search

For the first test of the sensors, scientists trained the software program to recognize six words (including "go", "left" and "right") and 10 numbers. Participants hooked up to the sensors silently said the words to themselves, and the software correctly picked up the signals 92 per cent of the time.

Then researchers put the letters of the alphabet into a matrix with each column and row labeled with a single-digit number. In that way, each letter was represented by a unique pair of number co-ordinates. These were used to silently spell "NASA" into a web search engine using the program.
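The letter-matrix scheme can be sketched in Python as follows. The source does not give the matrix layout, so the six-column grid, the 1-based row and column labels and the helper names `build_grid`, `encode` and `decode` are all hypothetical; the sketch only illustrates that each letter maps to a unique pair of single-digit coordinates, so a word can be entered as a sequence of digit pairs.

```python
"""Letter-to-coordinate matrix for spelling words as digit pairs (illustrative sketch).

Hypothetical assumptions: a six-column grid filled row by row with A-Z,
and rows and columns labeled from 1.
"""
import string
from typing import Dict, List, Tuple

Coordinate = Tuple[int, int]
COLUMNS = 6  # hypothetical grid width; the source does not state the layout


def build_grid(columns: int = COLUMNS) -> Dict[str, Coordinate]:
    """Map each letter A-Z to a unique (row, column) pair of single digits."""
    grid: Dict[str, Coordinate] = {}
    for index, letter in enumerate(string.ascii_uppercase):
        row, col = divmod(index, columns)
        coordinate = (row + 1, col + 1)  # 1-based labels
        if max(coordinate) > 9:
            raise ValueError("grid shape needs labels beyond a single digit")
        grid[letter] = coordinate
    return grid


def encode(word: str, grid: Dict[str, Coordinate]) -> List[Coordinate]:
    """Turn a word into its sequence of (row, column) coordinate pairs."""
    return [grid[letter] for letter in word.upper()]


def decode(pairs: List[Coordinate], grid: Dict[str, Coordinate]) -> str:
    """Recover the word from a sequence of recognized coordinate pairs."""
    inverse = {coordinate: letter for letter, coordinate in grid.items()}
    return "".join(inverse[pair] for pair in pairs)


if __name__ == "__main__":
    grid = build_grid()
    pairs = encode("NASA", grid)
    print(pairs)
    print(decode(pairs, grid))
```

With this hypothetical layout, "NASA" encodes to (3, 2), (1, 1), (4, 1), (1, 1). A scheme like this means a recognizer that only distinguishes digits can still enter any letter of the alphabet.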