Three-dimensional TV is expected to be the next revolution in the history of television. Researchers have implemented a 3D TV prototype system with real-time acquisition, transmission, and 3D display of dynamic scenes. They developed a distributed, scalable architecture to manage the high computation and bandwidth demands. The 3D display shows high-resolution stereoscopic color images for multiple viewpoints without special glasses. This is the first real-time end-to-end 3D TV system with enough views and resolution to provide a truly immersive 3D experience.

Japan plans to make this futuristic television a commercial reality by 2020 as part of a broad national project that will bring together researchers from the government, technology companies and academia. The targeted "virtual reality" television would allow people to view high-definition images in 3D from any angle, in addition to being able to touch and smell the objects being projected upwards from a screen to the floor.

The evolution of visual media such as cinema and television is one of the major hallmarks of our modern civilization. In many ways, these visual media now define our modern lifestyle. Many of us are curious: what will our lifestyle be in a few years? What kind of films and television are we going to see? Although cinema and television both evolved over decades, there were stages that were once seen as revolutions:

1) at first, films were silent, then sound was added;

2) cinema and television were initially black-and-white, then color was introduced;

3) computer imaging and digital special effects have been the latest major novelty.

BASICS OF 3D TV


Humans gain three-dimensional information from a variety of cues. Two of the most important are binocular parallax and motion parallax.

A. Binocular Parallax

Binocular parallax means that, for any point you fixate, the images on the two eyes must be slightly different. Yet the two different images still allow us to perceive a stable visual world. Binocular parallax refers to the ability of the eyes to see a solid object and a continuous surface behind that object even though the two eyes see different views.
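As a rough illustration of how the two eyes' views differ, the following sketch computes the angular disparity between a fixated point and a second point straight ahead at another distance. The function names and the 0.065 m eye separation are assumptions chosen for illustration; they are not part of the system described in this article.

```python
import math

# Assumed typical distance between the eyes, in metres (illustrative value).
EYE_SEPARATION_M = 0.065


def vergence_angle(distance_m, eye_separation_m=EYE_SEPARATION_M):
    """Angle (radians) between the two lines of sight to a point straight ahead."""
    return 2.0 * math.atan(eye_separation_m / (2.0 * distance_m))


def angular_disparity(fixation_m, target_m, eye_separation_m=EYE_SEPARATION_M):
    """Difference in vergence angle between a target and the fixated point.

    Positive for targets nearer than the fixation point, negative for farther ones.
    """
    return vergence_angle(target_m, eye_separation_m) - vergence_angle(
        fixation_m, eye_separation_m
    )


if __name__ == "__main__":
    # Fixate at 2 m and compare targets in front of and behind that point.
    for target in (1.0, 2.0, 4.0):
        d = math.degrees(angular_disparity(2.0, target))
        print(f"fixation 2.0 m, target {target} m: disparity {d:+.3f} deg")
```

The disparity is zero at the fixation distance and changes sign on either side of it, which is the small difference between the two retinal images that the text describes.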

B. Motion Parallax

Motion parallax is the information at the retina caused by the relative movement of objects as the observer moves to the side (or moves the head sideways). Motion parallax varies depending on the distance of the observer from the objects. The observer's movement also causes occlusion (the covering of one object by another), and as the movement changes, so too does the occlusion. This can give a powerful cue to the distance of objects from the observer.
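The dependence on distance can be sketched with simple geometry. The helper names below are hypothetical, and the model assumes an object that starts straight ahead of an observer who then steps sideways.

```python
import math


def angular_shift_deg(lateral_move_m, object_distance_m):
    """Apparent angular shift (degrees) of an object that started straight ahead,
    after the observer moves sideways by lateral_move_m."""
    return math.degrees(math.atan2(lateral_move_m, object_distance_m))


def relative_parallax_deg(lateral_move_m, near_m, far_m):
    """How much more the near object appears to shift than the far one."""
    return angular_shift_deg(lateral_move_m, near_m) - angular_shift_deg(
        lateral_move_m, far_m
    )


if __name__ == "__main__":
    # A 10 cm sideways head movement, objects at 1 m and 10 m.
    print(angular_shift_deg(0.1, 1.0))
    print(angular_shift_deg(0.1, 10.0))
    print(relative_parallax_deg(0.1, 1.0, 10.0))
```

For the same head movement, the nearer object sweeps through a larger angle, which is why relative motion is a usable distance cue.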

C. Depth perception

Depth perception is the visual ability to perceive the world in three dimensions. It is a trait common to many higher animals. Depth perception allows the beholder to accurately gauge the distance to an object. The small distance between our eyes gives us stereoscopic depth perception[7]. The brain combines the two slightly different images into one 3D image. This works most effectively for distances up to 18 feet. For objects at a greater distance, our brain uses relative size and motion.

As shown in the figure, each eye captures its own view, and the two separate images are sent on to the brain for processing. When the two images arrive simultaneously in the back of the brain, they are united into one picture. The mind combines the two images by matching up the similarities and adding in the small differences. The small differences between the two images add up to a big difference in the final picture! The combined image is more than the sum of its parts: it is a three-dimensional stereo picture.

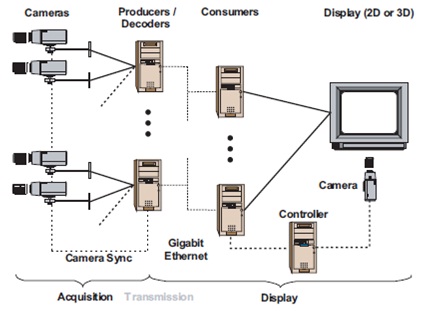

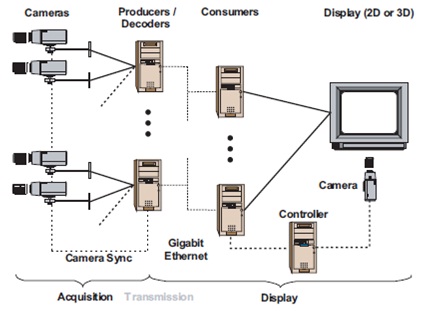

The whole system consists mainly of three blocks:

1. Acquisition

2. Transmission

3. Display Unit

A. Acquisition

The acquisition stage consists of an array of hardware-synchronized cameras. Small clusters of cameras are connected to the producer PCs. The producers capture live, uncompressed video streams and encode them using standard MPEG coding. The compressed video is then broadcast on separate channels over a transmission network, which could be digital cable, satellite TV or the Internet.

The system uses 16 Basler A101fc color cameras with 1300×1030, 8-bits-per-pixel CCD sensors.
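To get a feel for why the producers must compress the streams before transmission, the following sketch estimates the raw data rate of the camera array. The camera count, resolution and bit depth come from the text; the frame rate is not stated here, so `ASSUMED_FPS` is an assumption that should be replaced with the real value.

```python
CAMERAS = 16                  # from the text
WIDTH, HEIGHT = 1300, 1030    # sensor resolution, from the text
BITS_PER_PIXEL = 8            # from the text
ASSUMED_FPS = 12              # assumption: the frame rate is not given in this article


def raw_bytes_per_frame(width=WIDTH, height=HEIGHT, bits_per_pixel=BITS_PER_PIXEL):
    """Size of one uncompressed frame from one camera, in bytes."""
    return width * height * bits_per_pixel // 8


def raw_bytes_per_second(cameras=CAMERAS, fps=ASSUMED_FPS):
    """Uncompressed data rate of the whole camera array, in bytes per second."""
    return cameras * fps * raw_bytes_per_frame()


if __name__ == "__main__":
    rate = raw_bytes_per_second()
    print(f"{raw_bytes_per_frame()} bytes per frame per camera")
    print(f"{rate / 1e6:.1f} MB/s uncompressed for {CAMERAS} cameras at {ASSUMED_FPS} fps")
```

Under the assumed frame rate, the uncompressed total is in the hundreds of megabytes per second, which illustrates why each producer encodes its streams before they reach the transmission network.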


1) CCD Image Sensors: Charge-coupled devices (CCDs) are electronic devices that are capable of transforming a light pattern (image) into an electric charge pattern (an electronic image).

2) MPEG-2 Encoding: MPEG-2 is an extension of the MPEG-1 international standard for digital compression of audio and video signals. MPEG-2 is directed at broadcast formats at higher data rates; it provides extra algorithmic "tools" for efficiently coding interlaced video, supports a wide range of bit rates and provides for multichannel surround sound coding. MPEG-2 aims to be a generic video coding system supporting a diverse range of applications.

The researchers built a PCI card with a custom programmable logic device (CPLD) that generates the synchronization signal for all the cameras. So, what is a PCI card?

3) PCI Card: One key element of a computer is the bus. Essentially, a bus is a channel or path between the components in a computer. Here we concentrate on the bus known as the Peripheral Component Interconnect (PCI): what PCI is, how it operates and how it is used.

All 16 cameras are individually connected to the card, which is plugged into one of the producer PCs. Although it is possible to use software synchronization, the researchers consider precise hardware synchronization essential for dynamic scenes. Note that the price of the acquisition cameras can be high, since they will be mostly used in TV studios. The 16 cameras are arranged in a regularly spaced linear array.

3D DISPLAY

This is a brief explanation that we hope sorts out some of the confusion about the many 3D display options that are available today. We'll tell you how they work and what the relative tradeoffs of each technique are. Those of you who are just interested in comparing different liquid crystal shutter glasses techniques can skip to the section at the end. Of course, we are always happy to answer your questions personally and point you to other leading experts in the field[4]. The figure shows a diagram of the multi-projector 3D display with lenticular sheets.

The display uses 16 NEC LT-170 projectors with 1024×768 native output resolution. This is less than the resolution of the acquired and transmitted video, which has 1300×1030 pixels. However, HDTV projectors are much more expensive than commodity projectors. Commodity projectors also have a compact form factor. Out of eight consumer PCs, one is dedicated as the controller. The consumers are identical to the producers except for a dual-output graphics card that is connected to two projectors. The graphics card is used only as an output device.

For the rear-projection system, as shown in the figure, two lenticular sheets are mounted back-to-back with optical diffuser material in the center. The front-projection system uses only one lenticular sheet, with a retroreflective front-projection screen material made from flexible fabric mounted on the back. Photographs show the rear and front projection systems.
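The relationship between projectors, consumer PCs and resolutions can be sketched as follows. The projector count, the dual-output cards and both resolutions come from the text; assigning consecutive projector pairs to one consumer is an assumption made only for illustration, and the function names are hypothetical.

```python
PROJECTORS = 16               # from the text
OUTPUTS_PER_CONSUMER = 2      # dual-output graphics card, from the text
VIDEO_RES = (1300, 1030)      # acquired and transmitted video, from the text
PROJECTOR_RES = (1024, 768)   # native projector resolution, from the text


def assign_projectors(projectors=PROJECTORS, outputs=OUTPUTS_PER_CONSUMER):
    """Map each projector index to a (consumer index, output index) pair.

    Assumption: projectors are paired in consecutive order.
    """
    return {p: (p // outputs, p % outputs) for p in range(projectors)}


def scale_factors(src=VIDEO_RES, dst=PROJECTOR_RES):
    """Horizontal and vertical scale needed to fit the video on one projector."""
    return dst[0] / src[0], dst[1] / src[1]


if __name__ == "__main__":
    mapping = assign_projectors()
    consumers = {consumer for consumer, _ in mapping.values()}
    print(f"{len(mapping)} projectors driven by {len(consumers)} consumer PCs")
    sx, sy = scale_factors()
    print(f"scale factors: horizontal {sx:.3f}, vertical {sy:.3f}")
```

With two outputs per consumer, 16 projectors need eight consumer PCs, which matches the count given above; both scale factors are below one, so the video has to be downsampled for display.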
